Image Upscaling using a Convolutional Neural Network

A group project investigating neural networks in order to build a Convolutional Neural Network (CNN) that upscales low-resolution game textures.

What is a CNN?

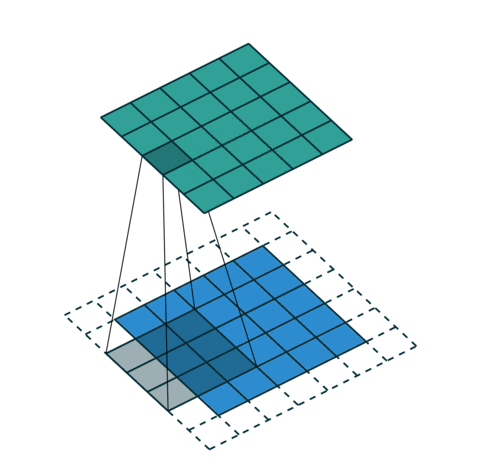

A Convolutional Neural Network (CNN) is a neural network that breaks down the data within an image to highlight its features. Training the network then learns the filters that extract those features. This is done through multiple convolutional and pooling layers, which feed into a fully connected network that processes the image into a classification.
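As an illustration of the pooling step mentioned above, the following is a minimal sketch of 2x2 max pooling in plain Python. The function name `max_pool_2x2` and the sample values are hypothetical and chosen only for this example; a real network would use a library layer instead.

```python
def max_pool_2x2(feature_map):
    """Downsample a 2D feature map by keeping the largest value in each 2x2 block."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, cols - 1, 2)  # step two columns at a time
        ]
        for r in range(0, rows - 1, 2)      # step two rows at a time
    ]


# Hypothetical 4x4 feature map.
feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

pooled = max_pool_2x2(feature_map)
print(pooled)
```

Each 2x2 block is reduced to a single value, so the feature map halves in width and height while keeping its strongest responses.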

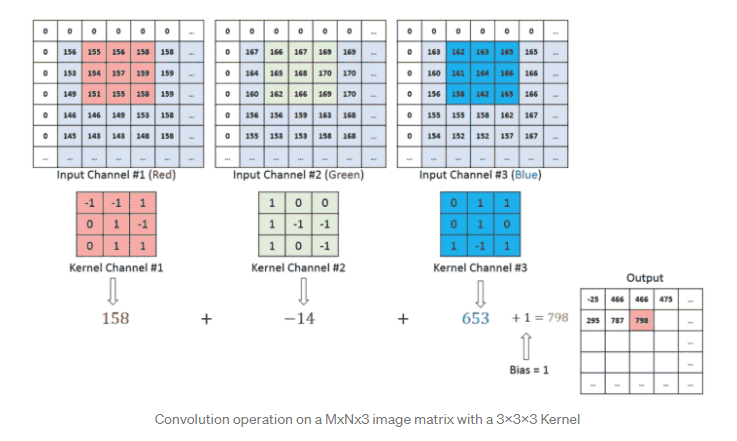

The convolutional layers operate on the image's pixel data, which is split into channels. For a colour image these are typically the R, G and B channels, giving three values per pixel, although the number of channels depends on the image. Each layer applies kernels (filters): small matrices of weights, for example 3x3, each of which produces a convolved feature. The kernel is placed over a 3x3 area of the image, the overlapping values are multiplied and summed, and the kernel then moves across the image in strides, one pixel at a time until the end of the row, repeating this for every row. Each colour channel has its own slice of the kernel, and the per-channel results are added together with a bias to give a single output value at each position.
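The sliding-window process described above can be sketched in plain Python. This is a simplified illustration, not the project's implementation: the function name `conv2d_single_filter`, the channel-first list layout and the sample values are all assumptions made for this example, and no padding is applied.

```python
def conv2d_single_filter(image, kernel, bias=0.0, stride=1):
    """Slide one multi-channel kernel over an image without padding.

    image:  nested lists indexed as image[channel][row][col]
    kernel: nested lists indexed as kernel[channel][row][col],
            with the same channel count as the image (square kernel assumed)
    """
    channels = len(image)
    height, width = len(image[0]), len(image[0][0])
    k = len(kernel[0])
    output = []
    for top in range(0, height - k + 1, stride):
        out_row = []
        for left in range(0, width - k + 1, stride):
            # Start from the bias, then add every channel's contribution.
            total = bias
            for ch in range(channels):
                for i in range(k):
                    for j in range(k):
                        total += image[ch][top + i][left + j] * kernel[ch][i][j]
            out_row.append(total)
        output.append(out_row)
    return output


# Hypothetical 4x4 image with three channels, each filled with a constant value.
image = [[[value] * 4 for _ in range(4)] for value in (1.0, 2.0, 3.0)]
# Hypothetical 3x3 kernel of ones for every channel.
kernel = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]

feature_map = conv2d_single_filter(image, kernel, bias=0.5)
print(feature_map)
```

A 3x3 kernel fits in a 4x4 image at only two positions per row and column, so the result is a 2x2 feature map. Each value is the sum over all three channels plus the bias.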

When upscaling or downscaling images, padding can be applied during convolution to control the size of the output. With same padding, the border of the image is padded so that the output stays the same size as the input (at a stride of one). With valid padding, no padding is added: the kernel only visits positions where it fits entirely inside the image, so the output is smaller than the input.
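The effect of each padding mode on output size can be worked out with a small helper. This is a sketch following the size rules used by Keras for `padding="same"` and `padding="valid"`; the function name `conv_output_size` is hypothetical.

```python
import math


def conv_output_size(input_size, kernel_size, stride=1, padding="same"):
    """Return the output width (or height) of a convolution along one dimension."""
    if padding == "same":
        # The input is padded so only the stride reduces the size.
        return math.ceil(input_size / stride)
    if padding == "valid":
        # No padding: the kernel must fit entirely inside the input.
        return (input_size - kernel_size) // stride + 1
    raise ValueError(f"unknown padding mode: {padding!r}")


same_size = conv_output_size(128, kernel_size=3, padding="same")
valid_size = conv_output_size(128, kernel_size=3, padding="valid")
print(same_size, valid_size)
```

For a 128-pixel-wide input and a 3x3 kernel at stride one, same padding keeps the width at 128, while valid padding shrinks it to 126.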


Our CNN

A breakdown of our network architecture is shown below.

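```python
import tensorflow as tf
from tensorflow.keras import layers

# NOTE: `data_augmentation` is not defined in this excerpt; it is assumed to be
# a Keras layer or model created earlier in the project.

inputs = tf.keras.Input(shape=(128, 128, 3))

# Data Augmentation
x = data_augmentation(inputs)

# Convolutional Base
# Three 2x upsampling steps: 128 -> 256 -> 512 -> 1024 pixels per side.
x = layers.UpSampling2D((2, 2))(x)
x = layers.UpSampling2D((2, 2))(x)
x = layers.UpSampling2D((2, 2))(x)

# Convolutions refine the upsampled image; "same" padding keeps its size.
x = layers.Conv2D(filters=32, kernel_size=6, activation="relu", padding="same")(x)
x = layers.Conv2D(filters=16, kernel_size=1, activation="relu", padding="same")(x)

# Final layer maps back to 3 colour channels (no activation).
outputs = layers.Conv2D(filters=3, kernel_size=3, padding="same")(x)

model = tf.keras.Model(inputs, outputs)
```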
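To make the architecture above easier to follow, the sketch below traces the tensor shape and counts the convolution weights by hand, without needing TensorFlow. It assumes that `data_augmentation` neither changes the image shape nor adds trainable weights; the helper `conv2d_params` and the variable names are hypothetical.

```python
def conv2d_params(in_channels, filters, kernel_size):
    """Weights plus one bias per filter for a square-kernel Conv2D layer."""
    return kernel_size * kernel_size * in_channels * filters + filters


# Input shape from the model definition: 128 x 128 pixels, 3 channels.
size, channels = 128, 3

# Three UpSampling2D((2, 2)) layers each double the width and height.
for _ in range(3):
    size *= 2

# (filters, kernel_size) for the three Conv2D layers, in order.
conv_layers = [(32, 6), (16, 1), (3, 3)]

total_params = 0
for filters, kernel_size in conv_layers:
    total_params += conv2d_params(channels, filters, kernel_size)
    channels = filters  # padding="same" leaves the spatial size unchanged

output_shape = (size, size, channels)
print(output_shape, total_params)
```

Under these assumptions the network turns a 128x128 texture into a 1024x1024 one (an 8x upscale per side) using only a few thousand trainable parameters, since the upsampling layers themselves have no weights.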

Further detail is available below: the Programmer's Manual describes the process required to create this CNN, the User's Manual explains how to use the application within the Unity Editor or as a standalone application, and the GitHub repository is where this work can be found and downloaded.